Dask Read Parquet

`dask.dataframe.read_parquet` reads a Parquet file into a Dask DataFrame. pandas is good for converting a single CSV file, but Dask can process a whole collection of files in parallel. A typical session starts with `import dask.dataframe as dd` and then reads a dataset directory, for example `raw_data_df = dd.read_parquet(path='dataset/parquet/2015.parquet/')`. A related helper, `create_metadata_file`, constructs a global `_metadata` file from a list of Parquet files; its options include the `pyarrow` engine and `compute_kwargs`. Users have also taken other routes. One attempted to use `dask.delayed`, which would let them decide which file goes into which partition. One answer points out that when a list of Parquet directories is passed to fastparquet, fastparquet internally …

In layman's terms, Parquet is an open-source file format designed for efficient data storage and retrieval. Dask is a great technology for converting CSV files to the Parquet format. Compared with formats like CSV, Parquet's main advantage is columnar storage, which lets a reader load only the columns it needs. `dd.read_parquet` reads a directory of Parquet data into a Dask DataFrame, one file per partition. Not every failure comes from Dask itself. In one reported case, the text of the error suggested that the service was temporarily down; if it persists, you may want to lodge …

One user would like to read multiple Parquet files with different schemas into a pandas DataFrame with Dask, and be able … On the writing side, `to_parquet` stores a Dask DataFrame to Parquet files.

Nikita Dolgov's technical blog: Reading Parquet file

How do you read Parquet data with Dask? `dd.read_parquet` reads a Parquet file into a Dask DataFrame, as in `raw_data_df = dd.read_parquet(path='dataset/parquet/2015.parquet/')`. Other formats have analogous readers. For example, `dd.read_hdf(pattern, key[, start, stop, ...])` reads HDF files into a Dask DataFrame.

to_parquet creating files not globable by read_parquet · Issue 6099

`to_parquet` stores a Dask DataFrame to Parquet files in the destination directory given by `path`. The issue above reports files written this way that `read_parquet` could not match with a glob pattern. One write-up also tracks memory usage through the script's output.

Harvard AC295 Lecture 4 Dask

pandas is good for converting a single CSV file to Parquet. Once the data is in Parquet, Dask reads it back in one line: `raw_data_df = dd.read_parquet(path='dataset/parquet/2015.parquet/')`.

PySpark read parquet Learn the use of READ PARQUET in PySpark

Parquet is a popular, columnar file format designed for efficient data storage and retrieval. Because data is stored column by column, a reader can load only the columns a query needs.

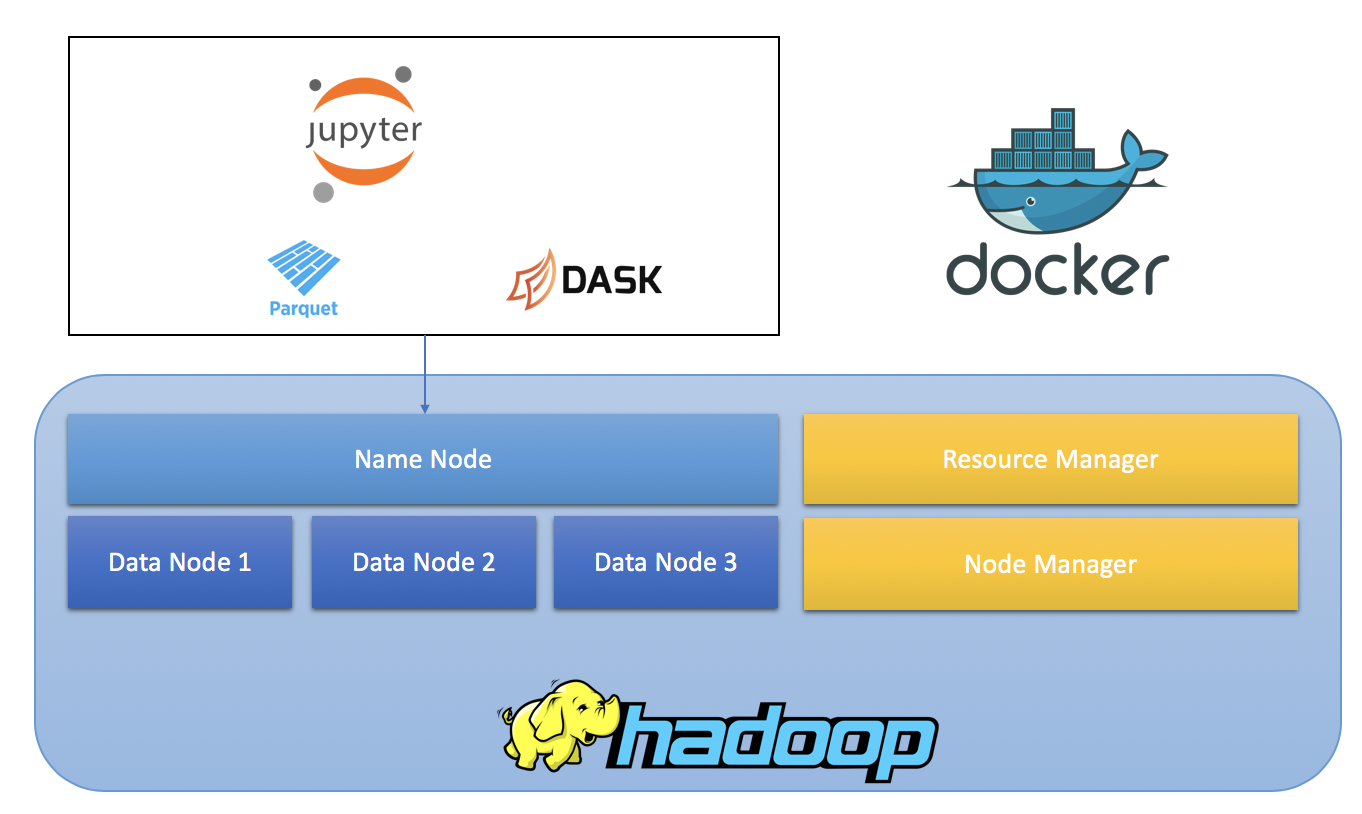

"FosforiVerdi" Working with HDFS, Parquet and Dask

By default, `dd.read_parquet` decides how files map to partitions. One user attempted to use `dask.delayed` instead, which would let them decide which file goes into which partition.

read_parquet is slower than expected with S3 · Issue 9619 · dask/dask

One answer to a slow-read question says: "I see two problems here. First, Dask is not splitting your input file, thus it reads all the data in a single partition, …" With only one partition, Dask has nothing to run in parallel.

read_parquet too slow between versions 1.* and 2.* · Issue 6376 · dask

Related reports include users trying to read back data they had just written, and users who would like to read multiple Parquet files with different schemas into one DataFrame. In a version regression like this one, script output that shows memory usage helps compare the behavior of the two releases.

Writing Parquet Files with Dask using to_parquet

After `import dask.dataframe as dd`, `to_parquet` stores a Dask DataFrame to Parquet files. Its parameters include `df`, the `dask.dataframe.DataFrame` to write, and `path`, a string or `pathlib.Path` giving the destination directory. The result can be read back with `dd.read_parquet`.

read_parquet fails for nonstring column names · Issue 5000 · dask

`read_parquet` returns a lazily evaluated Dask DataFrame, like the other readers in `dask.dataframe`. For instance, `read_hdf(pattern, key[, start, stop, ...])` reads HDF files into a Dask DataFrame. The data itself is not loaded until the result is computed.

Dask Read Parquet Files into DataFrames with read_parquet

Compared with formats like CSV, Parquet brings several advantages, and Dask is a great technology for converting CSV files to the Parquet format. How do you read Parquet data with Dask? `dd.read_parquet` reads a Parquet file into a Dask DataFrame.

raw_data_df = dd.read_parquet(path='dataset/parquet/2015.parquet/')

The call `dd.read_parquet(path='dataset/parquet/2015.parquet/')` assumes `import dask.dataframe as dd`. Passing several datasets at once needs more care: when a list of Parquet directories is passed to fastparquet, fastparquet internally …

Trying to Read Back

One answer reports that reading back does work in fastparquet on master when using either absolute paths or explicit relative paths.


How do you read Parquet data with Dask without running out of memory? Start with script output that shows memory usage. If a single partition holds most of the rows, Dask is not splitting the input and cannot spread the work across tasks.

Reading Multiple Parquet Files with Different Schemas

A common request is to read multiple Parquet files with different schemas into one DataFrame with Dask. In layman's terms, Parquet is an open-source file format designed for efficient data storage and retrieval, and each file carries its own schema.