How to Read a CSV File from DBFS in Databricks

You can work with files on DBFS or on the local driver node of the cluster. With Apache Spark, specify the full path inside the Spark read command; for example, `my_df = spark.read.format("csv").option("inferSchema", "true")` configures a CSV reader that infers the column types, and a subsequent `.load(...)` call with the full path returns the DataFrame. If you are combining a lot of CSV files, read them in directly with Spark rather than one at a time. Related tasks covered by the sources collected here include exporting CSV files from Databricks, writing a pandas DataFrame into a Databricks local file, and reading a CSV file from blob storage and pushing the data into a Synapse SQL pool table.
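A minimal sketch of the Spark read command with a full path. It assumes `spark` is the SparkSession that a Databricks notebook provides; the function name `read_csv_from_dbfs`, the `header` option, and the example path are illustrative choices, not taken from the source.

```python
def read_csv_from_dbfs(spark, path, header=True, infer_schema=True):
    """Read one CSV file into a Spark DataFrame.

    spark: the SparkSession (predefined as `spark` in Databricks notebooks).
    path:  the full path, e.g. "dbfs:/FileStore/tables/example.csv"
           (a hypothetical location used only for illustration).
    """
    reader = (
        spark.read.format("csv")
        # Treat the first line as column names (assumption about the file).
        .option("header", str(header).lower())
        # Ask Spark to sample the data and infer column types.
        .option("inferSchema", str(infer_schema).lower())
    )
    return reader.load(path)


# Usage inside a notebook (not executed here):
# my_df = read_csv_from_dbfs(spark, "dbfs:/FileStore/tables/example.csv")
# my_df.printSchema()
```

Schema inference costs an extra pass over the data, so for large files an explicit schema is often preferable.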

The Databricks File System (DBFS) is a distributed file system mounted into a Databricks workspace. How you work with files on Databricks depends on where they live: on DBFS or on the local driver node of the cluster. A typical notebook workflow is to upload a file to DBFS and then create and query a table or DataFrame from it. One workaround for remote files is to read them with the PySpark `spark.read.format('csv')` API.
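The distinction between DBFS and the driver node shows up in how paths are written. As a hedged sketch: Spark APIs generally address DBFS as `dbfs:/...`, while local file APIs on the driver usually see the same files under a `/dbfs/` mount (whether that mount is available depends on the cluster configuration). The helper names below are hypothetical.

```python
DBFS_SCHEME = "dbfs:/"   # form used by Spark and dbutils
LOCAL_MOUNT = "/dbfs/"   # form used by local file APIs on the driver


def to_local_path(dbfs_uri):
    """Convert 'dbfs:/FileStore/x.csv' to '/dbfs/FileStore/x.csv'."""
    if not dbfs_uri.startswith(DBFS_SCHEME):
        raise ValueError(f"expected a path starting with {DBFS_SCHEME!r}: {dbfs_uri!r}")
    return LOCAL_MOUNT + dbfs_uri[len(DBFS_SCHEME):].lstrip("/")


def to_spark_path(local_path):
    """Convert '/dbfs/FileStore/x.csv' to 'dbfs:/FileStore/x.csv'."""
    if not local_path.startswith(LOCAL_MOUNT):
        raise ValueError(f"expected a path starting with {LOCAL_MOUNT!r}: {local_path!r}")
    return DBFS_SCHEME + local_path[len(LOCAL_MOUNT):]
```

Keeping the conversion in one place avoids the common mistake of passing a `/dbfs/...` path to Spark or a `dbfs:/...` path to pandas.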

CSV files can be read and written on Azure Databricks using Python, Scala, or R. You can also use SQL to read CSV data, either directly or through a temporary view. Run `dbutils.fs.help()` in a Databricks notebook to see the help for the DBFS file system utilities.
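The temporary-view route for SQL can be sketched as follows. This assumes `spark` is the notebook's SparkSession; the function name and the default view name `csv_data` are hypothetical.

```python
def query_csv_with_sql(spark, path, view_name="csv_data"):
    """Load a CSV file, expose it as a temporary view, and query it with SQL."""
    df = (
        spark.read.format("csv")
        .option("header", "true")       # assumes the file has a header row
        .option("inferSchema", "true")  # infer column types
        .load(path)
    )
    # The view lives only for the current Spark session.
    df.createOrReplaceTempView(view_name)
    return spark.sql(f"SELECT * FROM {view_name}")


# Usage inside a notebook (not executed here):
# result = query_csv_with_sql(spark, "dbfs:/FileStore/tables/example.csv")
# result.show(5)
```

Once the view exists, any SQL cell in the same session can query it by name.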

How to Read CSV File into a DataFrame using Pandas Library in Jupyter

Web a work around is to use the pyspark spark.read.format('csv') api to read the remote files and append a. My_df = spark.read.format (csv).option (inferschema,true) # to get the types. Web how to work with files on databricks. Web apache spark under spark, you should specify the full path inside the spark read command. Web part of aws collective 13 i'm.

Databricks Read CSV Simplified A Comprehensive Guide 101

Web overview this notebook will show you how to create and query a table or dataframe that you uploaded to dbfs. Web june 21, 2023. The local environment is an. Web 1 answer sort by: Web method #4 for exporting csv files from databricks:

Databricks File System [DBFS]. YouTube

Web also, since you are combining a lot of csv files, why not read them in directly with spark: You can work with files on dbfs, the local driver node of the. The final method is to use an external. Web apache spark under spark, you should specify the full path inside the spark read command. Web 1 answer sort.

How to read .csv and .xlsx file in Databricks Ization

Web you can write and read files from dbfs with dbutils. The databricks file system (dbfs) is a distributed file system mounted into a databricks. Web you can use sql to read csv data directly or by using a temporary view. Web method #4 for exporting csv files from databricks: The input csv file looks like this:

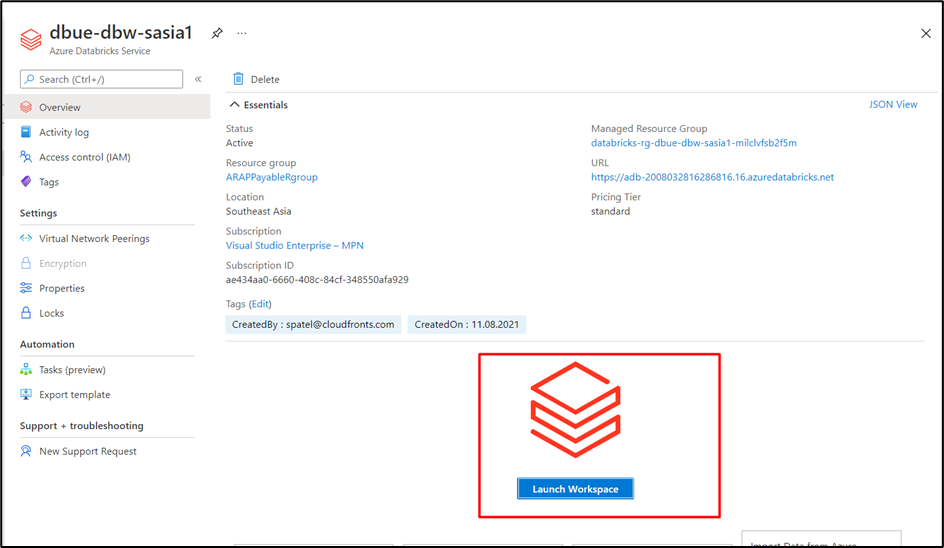

Azure Databricks How to read CSV file from blob storage and push the

Web a work around is to use the pyspark spark.read.format('csv') api to read the remote files and append a. My_df = spark.read.format (csv).option (inferschema,true) # to get the types. The local environment is an. You can work with files on dbfs, the local driver node of the. Web you can write and read files from dbfs with dbutils.

NULL values when trying to import CSV in Azure Databricks DBFS

Follow the steps given below to import a csv file into databricks and. Web you can write and read files from dbfs with dbutils. Web june 21, 2023. Web you can use sql to read csv data directly or by using a temporary view. Web also, since you are combining a lot of csv files, why not read them in.

Read multiple csv part files as one file with schema in databricks

Web you can write and read files from dbfs with dbutils. The input csv file looks like this: Follow the steps given below to import a csv file into databricks and. Web also, since you are combining a lot of csv files, why not read them in directly with spark: Web a work around is to use the pyspark spark.read.format('csv').

Databricks How to Save Data Frames as CSV Files on Your Local Computer

Web part of aws collective 13 i'm new to the databricks, need help in writing a pandas dataframe into databricks local file. You can work with files on dbfs, the local driver node of the. Web you can use sql to read csv data directly or by using a temporary view. Web overview this notebook will show you how to.

How to Write CSV file in PySpark easily in Azure Databricks

Web part of aws collective 13 i'm new to the databricks, need help in writing a pandas dataframe into databricks local file. Web apache spark under spark, you should specify the full path inside the spark read command. Web method #4 for exporting csv files from databricks: The local environment is an. Web you can use sql to read csv.

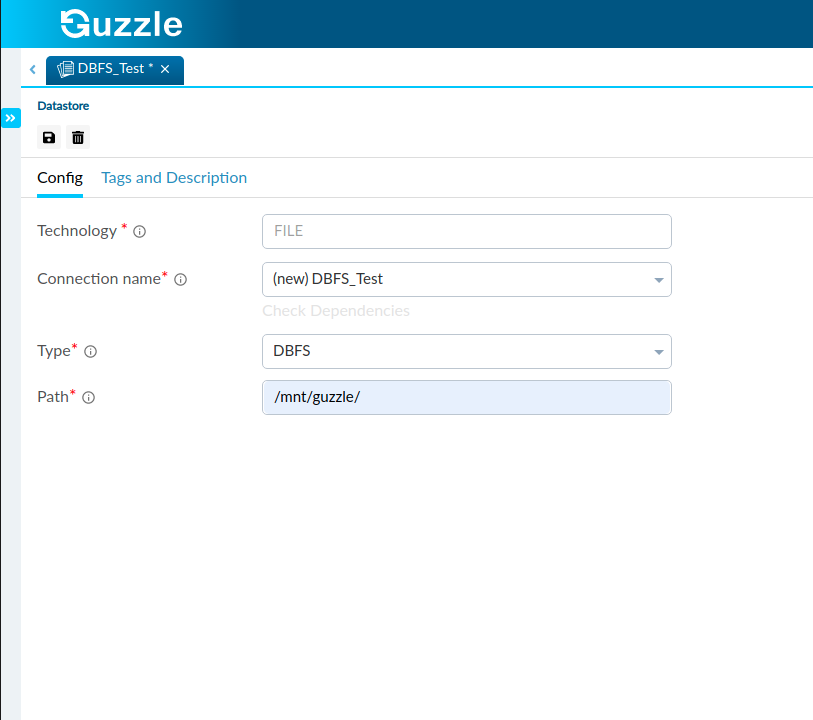

Databricks File System Guzzle

You can work with files on dbfs, the local driver node of the. Web overview this notebook will show you how to create and query a table or dataframe that you uploaded to dbfs. Web method #4 for exporting csv files from databricks: The local environment is an. My_df = spark.read.format (csv).option (inferschema,true) # to get the types.

Method #4 for Exporting CSV Files from Databricks

The final method is to use an external… Under Apache Spark, specify the full path inside the Spark read command. A notebook can then create and query a table or DataFrame from a file that you uploaded to DBFS.

You Can Write and Read Files from DBFS with dbutils

When reading with Spark, `my_df = spark.read.format("csv").option("inferSchema", "true")` configures a CSV reader that infers the column types. CSV files can be read and written on Azure Databricks using Python, Scala, or R, and SQL can read CSV data directly or through a temporary view. If you are combining a lot of CSV files, read them in directly with Spark.
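Reading many CSV files directly with Spark can be sketched like this. PySpark's `DataFrameReader.csv` accepts a single path, a directory, a glob pattern, or a list of paths; the function name and example paths are hypothetical, and the sketch assumes all files share the same columns.

```python
def read_many_csv(spark, paths, header=True):
    """Read several CSV files into one Spark DataFrame.

    paths: a directory, a glob such as "dbfs:/data/part-*.csv",
           or a list of file paths (all illustrative).
    """
    return (
        spark.read
        .option("header", str(header).lower())  # assumes every file has a header row
        .csv(paths)
    )


# Usage inside a notebook (not executed here):
# combined = read_many_csv(spark, ["dbfs:/data/jan.csv", "dbfs:/data/feb.csv"])
```

This avoids loading each file separately and concatenating the results by hand.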

A Workaround Is to Use the PySpark spark.read.format('csv') API to Read the Remote Files

You can work with files on DBFS or on the local driver node of the cluster. Follow the steps given below to import a CSV file into Databricks and… A common question from users new to Databricks is how to write a pandas DataFrame into a Databricks local file.
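For the pandas question, one possible approach is to write through the `/dbfs/` mount with `DataFrame.to_csv`. This is a sketch under assumptions: the mount must be available on the cluster, and the function name and example path are hypothetical.

```python
def save_pandas_csv(pdf, dbfs_uri):
    """Write a pandas DataFrame to DBFS via the driver's /dbfs/ mount.

    pdf:      a pandas DataFrame.
    dbfs_uri: target such as "dbfs:/FileStore/output/result.csv" (illustrative).
    Returns the local path that was written.
    """
    prefix = "dbfs:/"
    if not dbfs_uri.startswith(prefix):
        raise ValueError(f"expected a path starting with {prefix!r}: {dbfs_uri!r}")
    # pandas uses local file APIs, so translate dbfs:/... to /dbfs/...
    local_path = "/dbfs/" + dbfs_uri[len(prefix):].lstrip("/")
    pdf.to_csv(local_path, index=False)  # index=False omits the row index column
    return local_path


# Usage inside a notebook (not executed here):
# save_pandas_csv(my_pandas_df, "dbfs:/FileStore/output/result.csv")
```

The target directory has to exist already, since `to_csv` does not create parent directories.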

The Databricks File System (DBFS) is a distributed file system mounted into a Databricks workspace. Run `dbutils.fs.help()` in a Databricks notebook to see the help for the DBFS file system utilities.
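Beyond the help command, `dbutils.fs.ls` is a convenient way to find CSV files before reading them. The sketch below assumes `dbutils` is the object Databricks notebooks provide and that each listing entry exposes `path` and `name` attributes; the function name and directory are hypothetical.

```python
def list_csv_files(dbutils, directory):
    """Return the full paths of the CSV files directly inside a DBFS directory."""
    return [
        info.path
        for info in dbutils.fs.ls(directory)
        if info.name.lower().endswith(".csv")  # simple extension check
    ]


# Usage inside a notebook (not executed here):
# for path in list_csv_files(dbutils, "dbfs:/FileStore/tables/"):
#     print(path)
```

The returned paths are in `dbfs:/...` form, so they can be passed straight to a Spark read.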

![Databricks File System [DBFS]. YouTube](https://i.ytimg.com/vi/p7TQA2O8fWM/maxresdefault.jpg)