PySpark Read Parquet File

Apache Parquet is a columnar file format that provides optimizations to speed up queries, is far more efficient than row-oriented text formats such as CSV or JSON, and is supported by many other data processing systems. Spark SQL supports both reading and writing Parquet files and automatically preserves the schema of the original data. In PySpark, `spark.read.parquet('filename.parquet')` reads a Parquet file into a DataFrame; the equivalent long form is `df = spark.read.format('parquet').load('filename.parquet')`. The format name must be spelled `'parquet'`, because a misspelled name fails with an error. The matching writer is `df.write.parquet(...)`. Outside Spark, there is also a native, multithreaded C++ implementation of Apache Parquet, developed alongside Apache Arrow.
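A minimal sketch of the basic read described above. The helper name `read_parquet_file` is hypothetical, and the function assumes the caller already has a `SparkSession` (called `spark` here) and a real Parquet path.

```python
def read_parquet_file(spark, path):
    """Read a Parquet file (or a directory of Parquet files) into a DataFrame.

    `spark` is an existing SparkSession supplied by the caller; `path` is
    whatever location the caller passes in (for example 'filename.parquet').
    """
    # DataFrameReader.parquet takes the schema from the Parquet metadata,
    # so no schema has to be declared here.
    return spark.read.parquet(path)
```

In the `pyspark` shell, where `spark` already exists, this could be called as `read_parquet_file(spark, 'filename.parquet').show()`.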

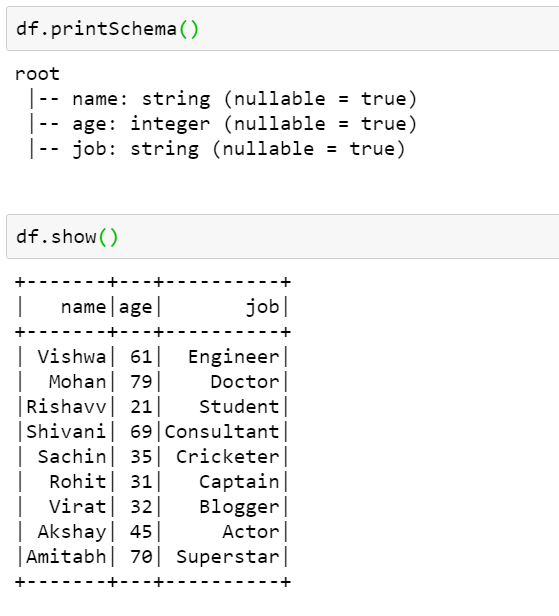

`read.parquet` is the method PySpark provides for reading data from Parquet files at a given path into a DataFrame. The path can be a single file or a directory. A common question is how to read a directory tree only at its top level, for example at a `sales` directory, so that the data for all regions beneath it comes back in one DataFrame; pointing `spark.read.parquet` at the parent directory generally does this when the subdirectories follow Spark's `key=value` partition layout. On the write side, to save a PySpark DataFrame as multiple Parquet files of roughly a specific size, use the `repartition` method to split the data before writing.
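The `repartition` approach can be sketched as follows. Both function names are hypothetical, and `total_bytes` is an estimate the caller must supply: Spark does not report the final compressed Parquet size in advance, so the resulting file sizes are only approximate.

```python
import math


def files_for_target_size(total_bytes, target_file_bytes):
    """Return how many output files give roughly `target_file_bytes` each."""
    if target_file_bytes <= 0:
        raise ValueError("target_file_bytes must be positive")
    # Always write at least one file, even for an empty estimate.
    return max(1, math.ceil(total_bytes / target_file_bytes))


def write_in_files_of_size(df, path, total_bytes, target_file_bytes):
    """Repartition `df` and write it as Parquet; returns the partition count."""
    n = files_for_target_size(total_bytes, target_file_bytes)
    # Each non-empty partition is normally written as one part file.
    df.repartition(n).write.mode("overwrite").parquet(path)
    return n
```

For an estimated 1,000,000,000 bytes and a 128 MiB target, `files_for_target_size` returns 8.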

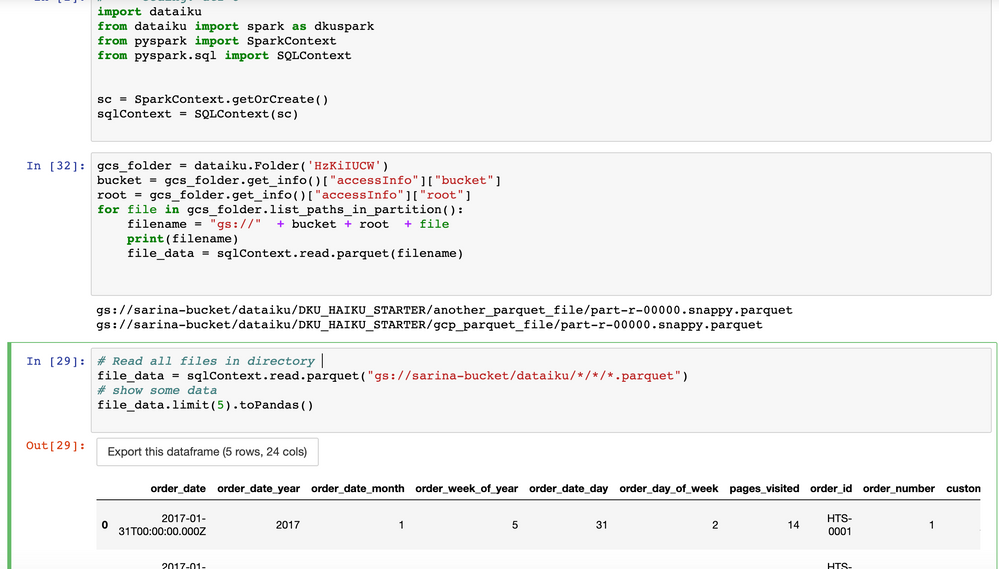

The pandas-style reader takes two main parameters: `path` (string), the file path, and `columns` (list), the columns to read. On older Spark versions you need to create an instance of `SQLContext` first; since Spark 2.0 a `SparkSession` serves as the entry point. `spark.read.parquet()` reads the content of a Parquet file into a DataFrame, and `DataFrame.write.parquet()` writes one out. `write` is an attribute of the DataFrame that returns a `DataFrameWriter`, which is what you use to export a PySpark DataFrame to a file. A typical check is to write a DataFrame into a Parquet file and read it back.
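With the plain Spark reader, the effect of a `columns` parameter can be approximated by selecting columns right after the read. This is a sketch, not the pandas-style function itself; `read_selected_columns` is a hypothetical name and `spark` is assumed to be an existing `SparkSession`.

```python
def read_selected_columns(spark, path, columns=None):
    """Read a Parquet path and optionally keep only the named columns."""
    df = spark.read.parquet(path)
    if columns is None:
        # No column list given: return every column in the file.
        return df
    # Parquet is columnar, so Spark can skip the columns not selected here.
    return df.select(*columns)
```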

Write A Dataframe Into A Parquet File And Read It Back.

PySpark provides a simple way to read Parquet files using the `read.parquet()` method, and `write.parquet()` is its counterpart for writing. Writing a DataFrame and reading the result back is a quick way to confirm that the data and schema survive the round trip. A temporary directory created with `tempfile.TemporaryDirectory()` is a convenient target for such a test; because Spark evaluates lazily, collect or show the rows before the directory is removed.
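A sketch of the round trip, assuming the caller passes in an existing `SparkSession` (`spark`) and a DataFrame (`df`). The function name and the `data.parquet` subdirectory name are hypothetical.

```python
import os
import tempfile


def write_and_read_back(spark, df):
    """Write `df` to a temporary Parquet location and read it back.

    Returns the restored schema and the collected rows.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "data.parquet")
        df.write.mode("overwrite").parquet(path)
        restored = spark.read.parquet(path)
        # Spark reads lazily, so materialize the rows while the files still exist.
        return restored.schema, restored.collect()
```

Comparing the returned schema with `df.schema` shows whether the column names and types were preserved.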

This Will Work From Pyspark Shell:

The `pyspark` shell already provides a session named `spark`, so a Parquet file can be read directly with `df = spark.read.format('parquet').load('filename.parquet')` or, equivalently, `df = spark.read.parquet('filename.parquet')`. To export a DataFrame, use its `write` attribute, which returns a `DataFrameWriter`.
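The shell usage can be wrapped in a small helper. `load_and_preview` is a hypothetical name, `filename.parquet` is the placeholder path from the text, and `spark` is the session the shell provides.

```python
def load_and_preview(spark, path="filename.parquet", n=5):
    """Load a Parquet path with the generic format/load API and preview it."""
    # The format name must be spelled 'parquet'.
    df = spark.read.format("parquet").load(path)
    df.printSchema()  # column names and types taken from the file metadata
    df.show(n)        # print the first n rows
    return df
```

In the shell this would be called as `df = load_and_preview(spark)`.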

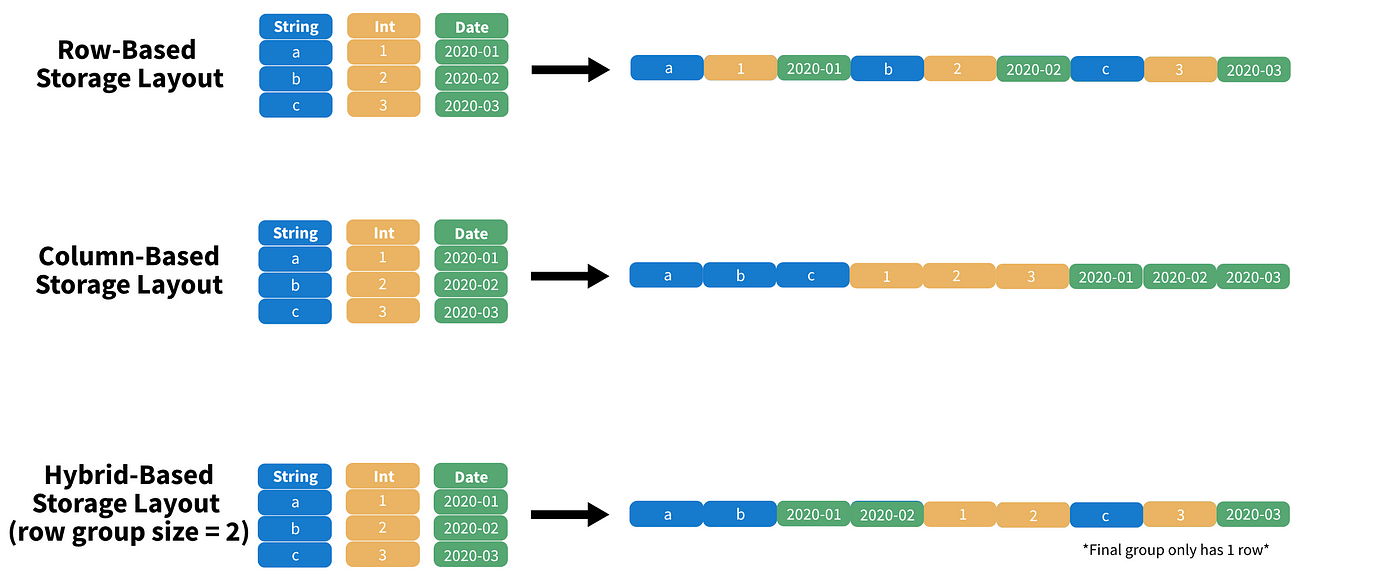

Parquet Is A Columnar Format That Is Supported By Many Other Data Processing Systems.

Because Parquet files carry their own schema, Spark SQL can read and write them while automatically preserving the schema of the original data. On older Spark versions you need to create an instance of `SQLContext` first; since Spark 2.0 the entry point is a `SparkSession`.

Load A Parquet Object From The File Path, Returning A Dataframe.

This is the description of the pandas-style `read_parquet(path, columns=...)` function, which the pandas API on Spark exposes as `pyspark.pandas.read_parquet`. The native equivalents are `spark.read.parquet(...)` for reading and `df.write.parquet(...)` for writing. A DataFrame loaded from Parquet can also be exported to other formats, for example written to a CSV file with `df.write.csv(...)`.
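A short sketch of reading Parquet and writing the result as CSV. The function name and both paths are hypothetical, `spark` is assumed to be an existing `SparkSession`, and the `header` option is one reasonable choice rather than a requirement.

```python
def export_parquet_to_csv(spark, parquet_path, csv_path):
    """Read a Parquet path and write its rows out as CSV with a header row."""
    df = spark.read.parquet(parquet_path)
    # Spark writes a directory of part files at csv_path, not a single file.
    df.write.mode("overwrite").option("header", True).csv(csv_path)
    return df
```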