Spark Read Table

Reading a Spark table includes reading from a table, loading data from files, and operations that transform data. In this article, we are going to learn about reading data from SQL tables in Spark. Many systems store their data in an RDBMS, and often we have to connect Spark to one of these relational databases and process that data. Spark SQL provides spark.read().csv(file_name) to read a file or directory of files in CSV format into a Spark DataFrame, and dataframe.write().csv(path) to write to a CSV file. Spark SQL also supports reading and writing data stored in Apache Hive. In the simplest form, the default data source (Parquet, unless configured otherwise) is used. When a table is read through the pandas API, index_col is a str or list of str, optional, default: None. Another case covered is loading data from an autonomous database at the root compartment.
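The CSV read and write calls described above can be sketched in PySpark as follows. This is a minimal illustration rather than a reference implementation: the helper name copy_csv and the header, inferSchema, and overwrite settings are assumptions chosen for the example, and spark is expected to be an existing SparkSession.

```python
def copy_csv(spark, src_path, dst_path):
    """Read CSV file(s) at src_path and write them back out as CSV at dst_path.

    `spark` is assumed to be an existing SparkSession; both paths are
    hypothetical placeholders supplied by the caller.
    """
    # header=True treats the first line as column names; inferSchema=True
    # asks Spark to scan the data and guess column types.
    df = spark.read.csv(src_path, header=True, inferSchema=True)
    # mode="overwrite" replaces any existing output at dst_path.
    df.write.csv(dst_path, header=True, mode="overwrite")
    return df
```

Note that src_path may be a single file or a directory of CSV files, and that Spark writes its output as a directory of part files rather than as one file.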

The pandas API signature ends with … Union[str, List[str], None] = None) → pyspark.pandas.frame.DataFrame. Related Hive topics include interacting with different versions of Hive metastore and specifying the storage format for Hive tables. Parquet is a columnar format that is supported by many other data processing systems, and Spark SQL provides support for both reading and writing Parquet files that automatically preserves the schema of the original data. You can also learn how to connect an Apache Spark cluster in Azure HDInsight with Azure SQL Database, then read data from and write data into Azure SQL Database. One reported problem is that the Spark catalog is not getting refreshed with the new data inserted into an external Hive table. There is also example code for the Spark Oracle datasource with Java.
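A short PySpark sketch of the Parquet behaviour described above: a DataFrame is written to Parquet and read back, and the schema travels with the files. The helper name parquet_round_trip and the overwrite mode are assumptions made for the example.

```python
def parquet_round_trip(spark, df, path):
    """Write df to Parquet at `path`, then read it back as a new DataFrame.

    `spark` is assumed to be an existing SparkSession and `path` a
    hypothetical location that Spark can write to.
    """
    # Parquet files store the schema alongside the data, so no schema
    # needs to be supplied when reading them back.
    df.write.mode("overwrite").parquet(path)
    return spark.read.parquet(path)
```

Comparing df.schema with the schema of the returned DataFrame is one way to check what was preserved; Spark may mark columns as nullable when reading Parquet back.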

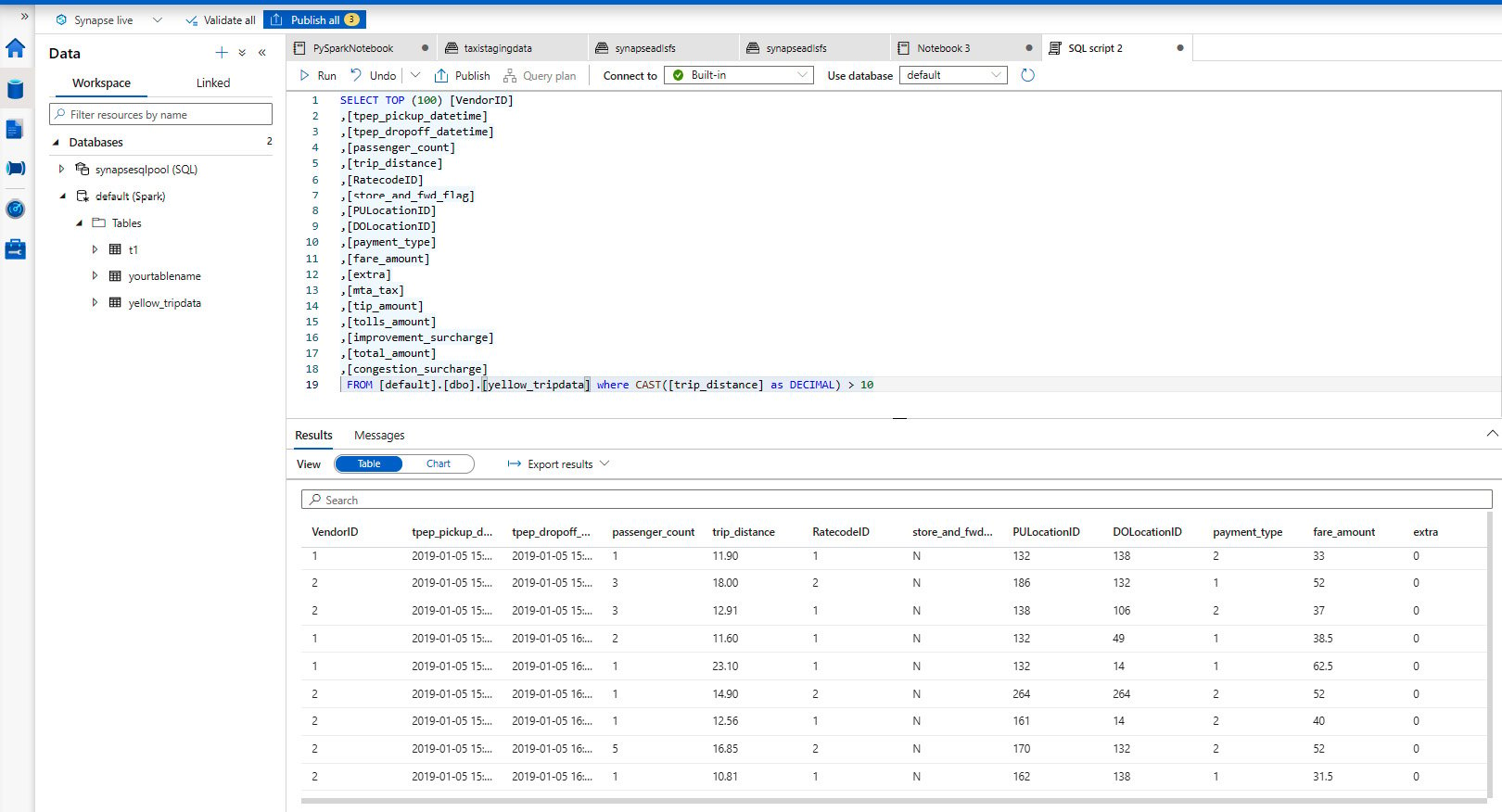

The Spark filter() or where() function is used to filter the rows from a DataFrame or Dataset based on one or multiple conditions or a SQL expression. Read a Spark table and return a DataFrame. In order to connect to MySQL server from Apache Spark… Loading data from an autonomous database at the root compartment: Dataset oracledf = spark.read().format(oracle… Aug 21, 2023.
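As a small illustration of filtering on several conditions with a SQL expression, the sketch below joins condition strings with AND and passes the result to where(). The helper name filter_rows and the column names in the usage note are hypothetical.

```python
def filter_rows(df, conditions):
    """Keep only the rows of df that satisfy every SQL condition string.

    `conditions` is a non-empty list of SQL boolean expressions; the
    caller is responsible for making sure they are trusted input.
    """
    # Parenthesise each condition so that OR inside one condition does
    # not change how the conditions combine.
    expr = " AND ".join(f"({c})" for c in conditions)
    # where() is an alias of filter(); either call accepts this string.
    return df.where(expr)
```

For example, filter_rows(df, ["age >= 18", "country = 'US'"]) keeps rows that match both conditions, assuming df has age and country columns.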

However, since Hive has a large number of dependencies, these dependencies are not included in the default Spark distribution. You can also create a Spark DataFrame from a list or a … You can use the where() operator instead of filter() if you are coming from a SQL background. // Note you don't have to provide driver class name and JDBC URL.

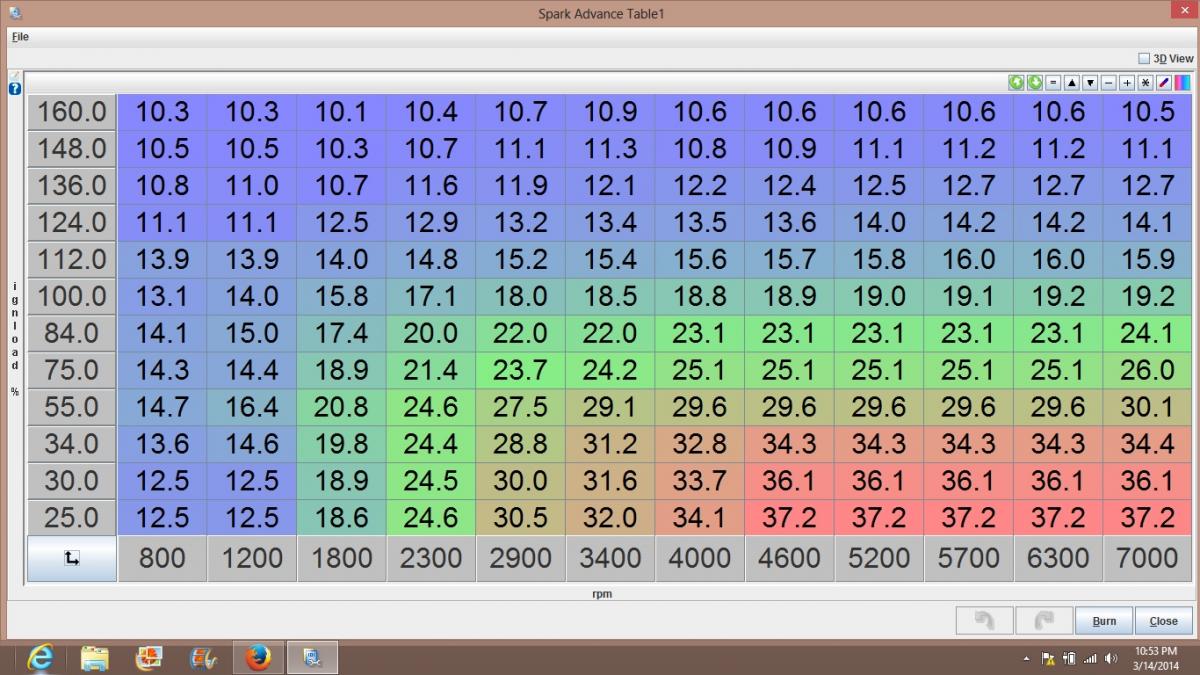

Spark SQL provides support for both reading and writing Parquet files that automatically preserves the schema of the original data. You can easily load tables to DataFrames. A related question covers reading tables and filtering by partition. You can also run SQL on files directly.
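Running SQL on files directly means putting the format name and a backtick-quoted path where a table name would normally go. The sketch below builds such a query and runs it; the helper names file_query and query_file are assumptions for this example.

```python
def file_query(fmt, path):
    """Build a query that selects everything from a file, with no table defined.

    `fmt` is a data source name such as "parquet", "csv", or "json".
    """
    # The path is wrapped in backticks and prefixed with the format name.
    return f"SELECT * FROM {fmt}.`{path}`"


def query_file(spark, fmt, path):
    """Run the file query on an existing SparkSession and return a DataFrame."""
    return spark.sql(file_query(fmt, path))
```

A call such as query_file(spark, "parquet", "/data/users.parquet") uses a hypothetical path; the returned DataFrame can then be filtered or joined like any other.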

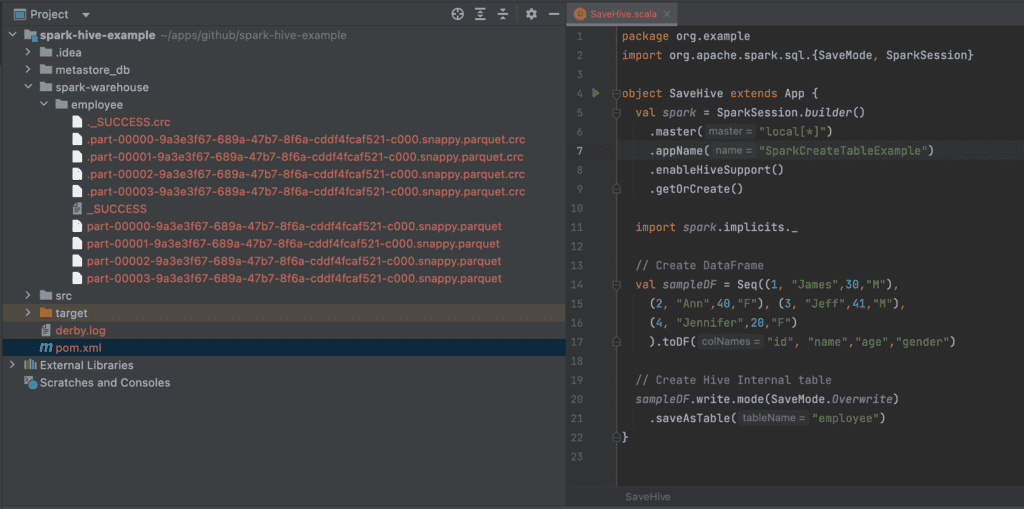

Spark SQL Tutorial 2 How to Create Spark Table In Databricks

Reads from a Spark table into a Spark DataFrame. Many systems store their data in an RDBMS. There is example code for the Spark Oracle datasource with Java. The Spark filter() or where() function is used to filter the rows from a DataFrame or Dataset based on one or multiple conditions or a SQL expression. The Scala interface for Spark …

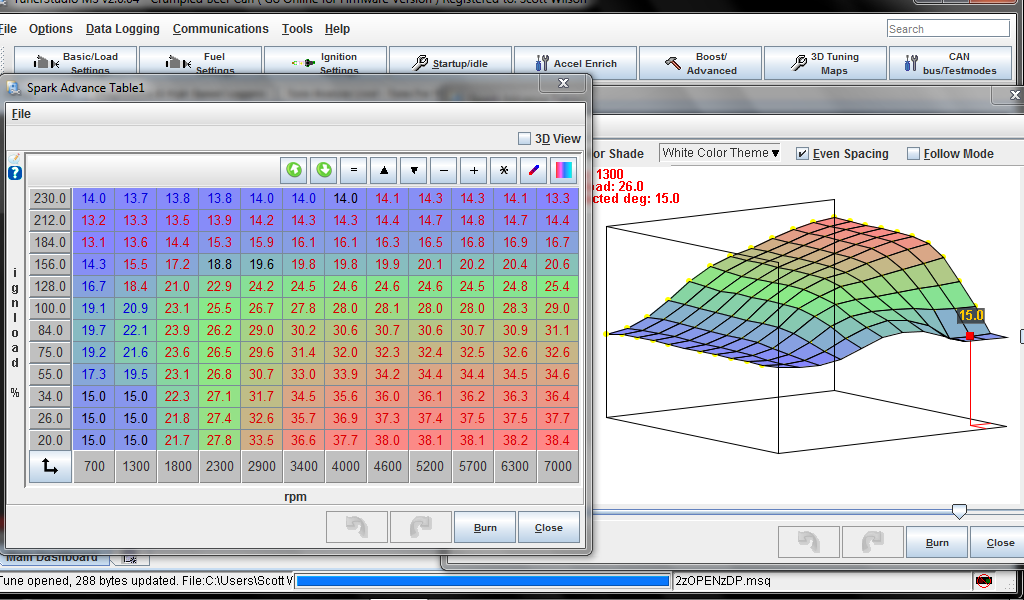

This is done by setting spark.sql.hive.convertMetastoreOrc or spark.sql.hive.convertMetastoreParquet to false. Dataset oracledf = spark.read().format(oracle… Spark SQL provides spark.read().csv(file_name) to read a file or directory of files in CSV format into a Spark DataFrame, and dataframe.write().csv(path) to write to a CSV file. A related question covers reading tables and filtering by partition.
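The two settings named above can be applied to a running session as in the sketch below. The helper name use_hive_serde is hypothetical, and the comment reflects my understanding of these flags (with them set to false, Spark reads Hive metastore Parquet and ORC tables through the Hive SerDe instead of its built-in readers), not something the original text spells out.

```python
# Settings named in the text; the values are passed as strings.
HIVE_SERDE_CONF = {
    "spark.sql.hive.convertMetastoreParquet": "false",
    "spark.sql.hive.convertMetastoreOrc": "false",
}


def use_hive_serde(spark):
    """Apply both settings to an existing SparkSession.

    Assumption: with these flags off, Hive metastore Parquet/ORC tables
    are read via the Hive SerDe rather than Spark's built-in readers.
    """
    for key, value in HIVE_SERDE_CONF.items():
        spark.conf.set(key, value)
```

The same keys could instead be passed when the session is created; which approach fits depends on how the cluster is configured.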

Read a table into a DataFrame. This includes reading from a table, loading data from files, and operations that transform data. A related Hive topic is specifying the storage format for Hive tables. The core syntax for reading data in Apache Spark is DataFrameReader.format(…).option("key", "value").schema(…).load(). DataFrameReader is the foundation for reading data in Spark.
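The format, option, schema, load chain can be sketched in PySpark as follows. The helper name read_with_reader and its parameters are illustrative only; the schema is given as a DDL string, which DataFrameReader.schema accepts alongside StructType objects.

```python
def read_with_reader(spark, fmt, path, schema_ddl, options):
    """Read `path` using the format -> option -> schema -> load chain.

    `spark` is an existing SparkSession, `schema_ddl` is a DDL string such
    as "id INT, name STRING", and `options` is a dict of reader options.
    """
    # spark.read returns a DataFrameReader; each call returns the reader
    # again, so the calls can be chained.
    reader = spark.read.format(fmt)
    for key, value in options.items():
        reader = reader.option(key, value)
    # Supplying a schema avoids an inference pass over the data.
    return reader.schema(schema_ddl).load(path)
```

For instance, read_with_reader(spark, "csv", "/data/people.csv", "id INT, name STRING", {"header": "true"}) would read a hypothetical CSV file with a header row.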

There is a table table_name which is partitioned by partition_column. Many systems store their data in an RDBMS. You can also learn how to connect an Apache Spark cluster in Azure HDInsight with Azure SQL Database, then read data from and write data into Azure SQL Database. You can also create a Spark DataFrame from a list or a …
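For the partitioned table described above, a read followed by a filter on the partition column might look like the sketch below. The helper name read_partition is hypothetical. Because Spark evaluates lazily, nothing is scanned when the function returns, and for partitioned tables the optimizer can usually restrict the scan to matching partitions, although that depends on the table format and Spark version.

```python
def read_partition(spark, table_name, partition_column, value):
    """Return the rows of `table_name` whose partition column equals `value`.

    `spark` is assumed to be an existing SparkSession.
    """
    # spark.read.table is lazy: no data is read until an action runs.
    df = spark.read.table(table_name)
    # Comparing a Column to a value builds a filter expression; Spark can
    # use it to skip partitions that cannot match.
    return df.where(df[partition_column] == value)
```

Calling explain() on the result is one way to check whether the partition filter was pushed down to the scan.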

Spark SQL Read Hive Table Spark By {Examples}

Reading data from SQL tables in Spark, by Mahesh Mogal: SQL databases or relational databases have been around for decades now. In the simplest form, the default data source (Parquet, unless configured otherwise) is used. // Loading data from autonomous database at root compartment. We have a streaming job that gets some info from a Kafka topic and queries the Hive table.
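When a long-running job queries a Hive table that other writers keep updating, one option worth trying is to refresh Spark's cached metadata for that table before each query. The sketch below uses spark.catalog.refreshTable for this; the helper name query_fresh is hypothetical, and whether a refresh resolves a given stale-data problem depends on how the table is written.

```python
def query_fresh(spark, table_name, query):
    """Refresh cached metadata for `table_name`, then run `query`.

    `spark` is assumed to be an existing SparkSession with Hive support,
    and `query` a SQL string that reads from `table_name`.
    """
    # Invalidate cached file listings and metadata so that files added by
    # other writers are picked up on the next read.
    spark.catalog.refreshTable(table_name)
    return spark.sql(query)
```

In a streaming job this call would typically sit inside the per-batch logic, so that every batch sees the current contents of the table.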

Spark Essentials — How to Read and Write Data With PySpark Reading

Read a Spark table and return a DataFrame. On reading tables and filtering by partition, one question begins: I'm trying to understand Spark's evaluation. For instructions on creating a cluster, see the Dataproc quickstarts. Another case is loading data from an autonomous database at the root compartment.

Reading data from SQL tables in Spark, by Mahesh Mogal: SQL databases or relational databases have been around for decades now. Often we have to connect Spark to one of these relational databases and process that data. // Loading data from autonomous database at root compartment. // Note you don't have to provide driver class name and JDBC URL.
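For a plain relational database, Spark's generic JDBC source is a common route, and unlike the Oracle datasource mentioned above it does need a JDBC URL and usually a driver class. The sketch below assumes MySQL with the Connector/J driver on the classpath; the helper names and all connection values are placeholders.

```python
def mysql_jdbc_options(host, port, database, table, user, password):
    """Build the option dict for Spark's generic JDBC source (MySQL flavour)."""
    return {
        "url": f"jdbc:mysql://{host}:{port}/{database}",
        "dbtable": table,
        "user": user,
        "password": password,
        # Assumes MySQL Connector/J 8.x is available to Spark.
        "driver": "com.mysql.cj.jdbc.Driver",
    }


def read_jdbc(spark, options):
    """Load the configured table into a DataFrame via an existing SparkSession."""
    return spark.read.format("jdbc").options(**options).load()
```

In practice the password would come from a secret store rather than source code, and the returned DataFrame can be processed like any other.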

Reading and writing data from ADLS Gen2 using PySpark Azure Synapse

// Loading data from autonomous database at root compartment. Dataset oracledf = spark.read().format(oracle… This is done by setting spark.sql.hive.convertMetastoreOrc or spark.sql.hive.convertMetastoreParquet to false. In order to connect to MySQL server from Apache Spark… You can also run SQL on files directly.

Spark Filter() Or Where() Function Is Used To Filter The Rows From DataFrame Or Dataset Based On The Given One Or Multiple Conditions Or SQL Expression.

There is example code for the Spark Oracle datasource with Java. This includes reading from a table, loading data from files, and operations that transform data. In this article, we are going to learn about reading data from SQL tables in Spark. The pandas API signature ends with … Union[str, List[str], None] = None) → pyspark.pandas.frame.DataFrame.

The Scala Interface For Spark SQL Supports Automatically Converting An RDD Containing Case Classes To A DataFrame.

The case class defines the schema of the table. On reading tables and filtering by partition, one question begins: I'm trying to understand Spark's evaluation. You can use the where() operator instead of filter() if you are coming from a SQL background. In the simplest form, the default data source (Parquet, unless configured otherwise) is used.

Spark SQL Provides Support For Both Reading And Writing Parquet Files That Automatically Preserves The Schema Of The Original Data.

The names of the arguments to the case class are read using reflection and become the names of the columns. A related Hive topic is specifying the storage format for Hive tables. Reads from a Spark table into a Spark DataFrame. The spark.read.table function is available in org.apache.spark.sql.DataFrameReader, and it in turn calls the spark.table function.
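The two entry points named above are also available in PySpark, and the sketch below shows both side by side. The helper names are hypothetical, and the table name passed in is assumed to exist in the session's catalog.

```python
def load_table(spark, table_name):
    """Read a catalog table through the DataFrameReader."""
    return spark.read.table(table_name)


def load_table_short(spark, table_name):
    """Read the same table through the SparkSession shortcut."""
    return spark.table(table_name)
```

Both calls return a DataFrame for the named table, so the choice between them is largely a matter of style; spark.read.table reads naturally next to other spark.read calls.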

index_col: str or list of str, optional, default: None

Most Apache Spark queries return a DataFrame. Spark SQL also supports reading and writing data stored in Apache Hive. Another case is loading data from an autonomous database at the root compartment. You can also create a Spark DataFrame from a list or a …
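Creating a DataFrame from a list can be sketched as below, using a list of tuples plus a list of column names. The helper name people_dataframe, the sample rows, and the column names are all made up for the illustration.

```python
def people_dataframe(spark):
    """Build a small DataFrame from an in-memory list of tuples.

    `spark` is assumed to be an existing SparkSession.
    """
    # Each tuple is one row; the schema list names the columns in order
    # and Spark infers the column types from the values.
    rows = [("Alice", 34), ("Bob", 45)]
    return spark.createDataFrame(rows, schema=["name", "age"])
```

The result behaves like any DataFrame read from a table, so it is handy for trying out filters and joins without touching real data.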